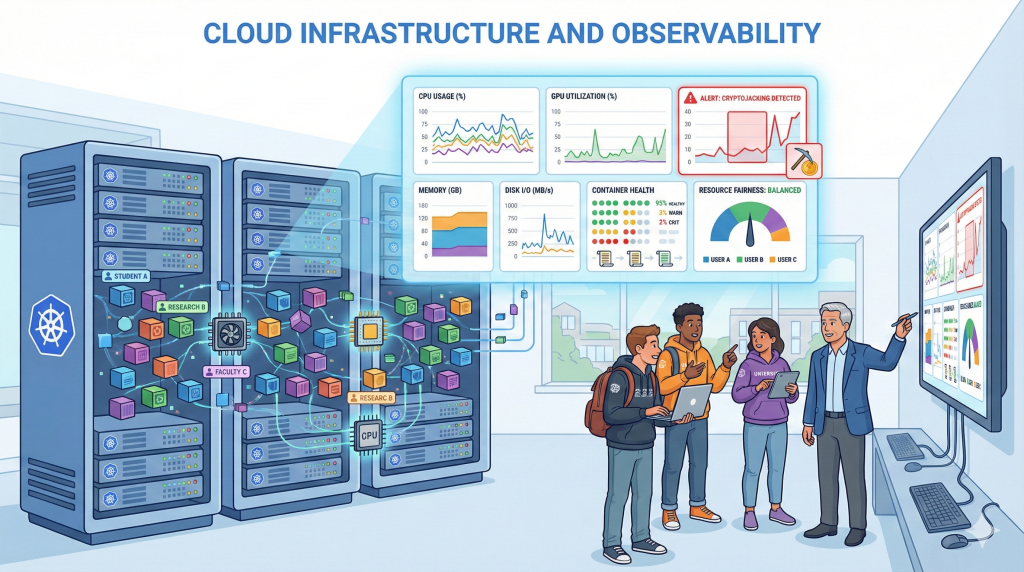

We build and operate Kubernetes‑based teaching and research clusters in which many users share CPU, GPU, and storage resources. Our work focuses on making these clusters fair and practical for real departments: analyzing CPU throttling and scheduling policies, tuning Ceph‑backed storage and LevelDB/RocksDB I/O in containers, and designing multi‑user authentication and access control so that students can safely run their own workloads.

On top of this infrastructure, we develop observability and security pipelines. Cluster‑wide monitoring collects metrics, logs, and traces with low overhead, enabling fairness‑oriented telemetry across shared resource pools and early detection of anomalies such as cryptojacking containers or abusive jobs. The overall goal is to create observable, secure, and student‑friendly cloud platforms that serve as both research testbeds and teaching environments.